We have started moving all our internal services from our local servers to the cloud. One big part of that job is moving all our test environments, and we took the opportunity to revise that infrastructure. Until now, our environments were pretty much static: one Tomcat per application, with the communication between applications set up in the products’ configuration modules, and one ActiveMQ instance with lots of environment-prefixed queue names for communication between applications. A pretty messy setup, actually.

As long as we had enough environments, we could deploy new versions quite easily. The obvious problems are that the number of environments keeps growing and that spinning up a new environment requires manual steps.

Another flaw is that the test environments differ too much from the production environment, since multiple test environments run on the same host. And what about the production environment? How is it set up? Well, we have documents describing the packages needed, the firewall configuration, the applications’ locations and so on, but none of them are 100% accurate.

Docker to the rescue

We do not want a limit on the number of test environments we can run. We want our environment configuration (installed packages, scripts, configuration files, environment variables, etc.) to be versioned in a VCS. And setting up a new environment must be fast!

Marcus Lönnberg recommended Docker after having used it for quite a while. Docker is a Linux container engine that provides lightweight virtualization. Once built, an image can run on any Linux host that supports Docker. Images are built hierarchically, each on top of another, which enables image reuse and avoids duplicated configuration.

We run a private registry for our Docker images, to which we push new and updated images and from which we pull them. All our Dockerfiles and image build scripts are kept in Git, so every change to our environments is version controlled, in contrast to the mutable production environment we are running today.
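To show how the image hierarchy and the registry fit together, here is a minimal sketch of a build script for an image repository. It assumes a hypothetical layout with one directory per image, each holding a Dockerfile whose `FROM` line names its parent image, and simply builds and pushes the images in the order given. The `DOCKER` override is only there so the script can be dry-run with `echo`.

```bash
# Hypothetical build script: one directory per image, each with a
# Dockerfile whose FROM line names its parent image.
REGISTRY=${REGISTRY:-docker-registry.speedledger.net}
DOCKER=${DOCKER:-docker}  # set DOCKER=echo for a dry run

# Build and push the given images in order. A child's "FROM parent" is
# resolved against images that exist locally or in the registry, so every
# parent must be listed before its children.
build_images() {
    for image in "$@"; do
        $DOCKER build -t "$REGISTRY/$image" "$image/" || return 1
        $DOCKER push "$REGISTRY/$image" || return 1
    done
}
```

For example, `build_images tomcat activemq` would build a (hypothetical) `tomcat` image whose Dockerfile starts with `FROM ubuntu`, and an app image could then start with `FROM docker-registry.speedledger.net/tomcat`, so the Tomcat setup is written once and reused.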

We have just started using Docker and have completed our first story, which lets us create a new test environment consisting of four containers:

- ActiveMQ

- SpeedLedger accounting system (app container)

- Login and proxy (app container)

- Database

These containers constitute one test environment. They are tied together at startup by linking them to each other, so each container can reach the others by their link names. This is how we currently start up a new test environment:

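```bash
#!/bin/bash
# launch-test-environment: start one complete, isolated test environment.
set -e

# Timestamp suffix keeps the container names unique per environment.
NAME_POSTFIX=$(date +%Y%m%d-%H%M%S)
DB_NAME="oracle_$NAME_POSTFIX"
JMS_NAME="activemq_$NAME_POSTFIX"
APP_NAME="accounting_$NAME_POSTFIX"
PROXY_NAME="proxy_$NAME_POSTFIX"

IMAGE_PREFIX=docker-registry.speedledger.net

# Database; -p 1521 publishes the container port on a random host port.
echo "$DB_NAME"
docker run -t -d -p 1521 --name "$DB_NAME" "$IMAGE_PREFIX/oracle-xe:sl"

# Message broker.
echo "$JMS_NAME"
docker run -t -d --name "$JMS_NAME" "$IMAGE_PREFIX/activemq"

# Accounting app, reaching the database and broker through the link
# aliases "oracle" and "activemq".
echo "$APP_NAME"
docker run -t -d \
  --name "$APP_NAME" \
  --link "$DB_NAME:oracle" \
  --link "$JMS_NAME:activemq" \
  "$IMAGE_PREFIX/accounting:ea6757a8090d"

# Login and proxy; port 8080 is published on a random host port.
echo "$PROXY_NAME"
docker run -t -d -p 8080 \
  --name "$PROXY_NAME" \
  --link "$DB_NAME:oracle" \
  --link "$APP_NAME:accounting" \
  --link "$JMS_NAME:activemq" \
  "$IMAGE_PREFIX/proxy:d277d11f1376"
```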

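Inside a container, a link alias such as `oracle` works as a hostname, and Docker's legacy links also inject environment variables like `ORACLE_PORT_1521_TCP_ADDR` for each port the linked container exposes. A minimal sketch of how an app container's startup script might turn these into connection URLs; the helper names, the ActiveMQ port 61616 and the Oracle XE SID `XE` are assumptions, not taken from our images.

```bash
# Hypothetical helpers for an app container's startup script. Legacy
# --link creates <ALIAS>_PORT_<port>_TCP_ADDR and _TCP_PORT variables for
# every port the linked container exposes; if they are missing, fall back
# to the link alias as hostname and the default port.
jdbc_url() {
    host=${ORACLE_PORT_1521_TCP_ADDR:-oracle}
    port=${ORACLE_PORT_1521_TCP_PORT:-1521}
    # Oracle thin-driver URL; the SID "XE" is an assumption for Oracle XE.
    printf 'jdbc:oracle:thin:@%s:%s:XE\n' "$host" "$port"
}

broker_url() {
    # 61616 is ActiveMQ's default OpenWire port (assumed exposed by the image).
    host=${ACTIVEMQ_PORT_61616_TCP_ADDR:-activemq}
    port=${ACTIVEMQ_PORT_61616_TCP_PORT:-61616}
    printf 'tcp://%s:%s\n' "$host" "$port"
}
```

Because the app only ever sees the aliases, the same image works in every test environment regardless of the generated container names.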

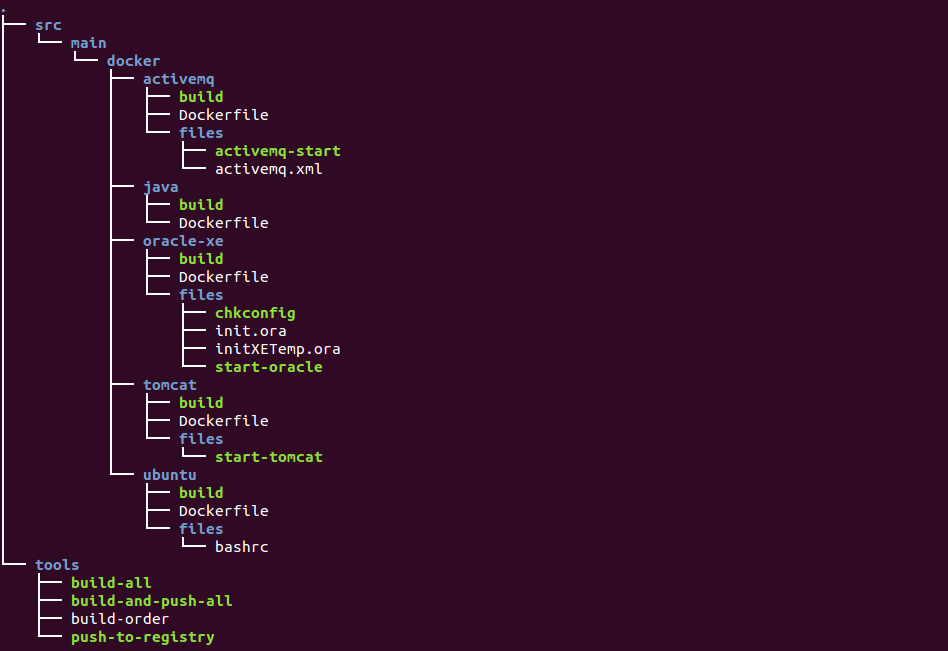

We build our images in a hierarchy with ubuntu as the base image. The Dockerfiles for the app containers reside in each app’s repository.

All other images are built from source in a separate repository. Below is a snapshot of the current source structure of that repository.

Every time Jenkins builds one of our applications, we plan to build a new image identified by the application’s VCS branch and changeset hash. This image is based on a Tomcat image and contains the newly built war file. The image is then pushed to our Docker registry, ready to be pulled down when spinning up a test environment. We might even spin up a test environment automatically for every change, until we see that the number of concurrent test environments becomes unreasonably high. The big advantage, of course, is always having a test environment ready without even pushing a button in Jenkins.
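A minimal sketch of that CI step, assuming Jenkins passes the application name, branch and changeset hash as arguments and that the app's Dockerfile (based on our Tomcat image) copies in the war file. Branch names such as `feature/login` are not valid Docker tags, so the hypothetical `image_tag` helper replaces disallowed characters: a tag may contain only letters, digits, `_`, `.` and `-`, may not start with `.` or `-`, and is at most 128 characters long.

```bash
REGISTRY=${REGISTRY:-docker-registry.speedledger.net}

# Hypothetical helper: turn a branch name and changeset hash into a valid
# Docker tag, e.g. "feature/login" + "ea6757a8090d" -> "feature-login-ea6757a8090d".
image_tag() {
    # Replace every character Docker does not allow in a tag with '-'.
    safe_branch=$(printf '%s' "$1" | tr -c 'A-Za-z0-9_.-' '-')
    # Drop leading '.' or '-' and cap the length at 128 characters.
    printf '%s-%s\n' "$safe_branch" "$2" | sed 's/^[.-]*//' | cut -c1-128
}

# Hypothetical CI step, run in the app's checkout after the war is built.
# Usage: build_app_image <app> <branch> <changeset-hash>
build_app_image() {
    image="$REGISTRY/$1:$(image_tag "$2" "$3")"
    docker build -t "$image" . && docker push "$image"
}
```

With these assumptions, `build_app_image accounting default ea6757a8090d` would push `docker-registry.speedledger.net/accounting:default-ea6757a8090d`, which a launch script could then reference directly.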

To avoid having too many containers running at the same time, we will probably add a monitoring application that stops containers that have not been used for some period of time.
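Docker itself does not know when a container last served a request, so such a monitor needs that information from elsewhere. A minimal sketch, assuming a hypothetical report (for example derived from the proxy's access logs) that lists each container name with the epoch time of its last request:

```bash
# Hypothetical idle-container reaper. Reads lines of the form
#   <container-name> <epoch-seconds-of-last-request>
# on stdin and prints the names of containers idle for longer than
# max_idle seconds, relative to "now" (epoch seconds).
idle_containers() {
    now=$1
    max_idle=$2
    # "|| [ -n "$name" ]" also handles a last line without a newline.
    while read -r name last_used || [ -n "$name" ]; do
        # Skip blank or incomplete lines.
        [ -n "$name" ] && [ -n "$last_used" ] || continue
        if [ $((now - last_used)) -gt "$max_idle" ]; then
            printf '%s\n' "$name"
        fi
    done
}
```

It could be wired up as `last_used_report | idle_containers "$(date +%s)" 14400 | xargs -r docker stop`, where `last_used_report` is hypothetical, 14400 seconds is four hours, and `-r` is GNU xargs' flag for not running the command on empty input.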

Using it in production

So far we only use containers for test environments. A nice side effect of running lightweight containers in the cloud is that we could quite easily turn a test environment into a production node, given an infrastructure that supports multiple simultaneous production environments with a controller in front that keeps track of the existing environments. We would simply have to switch the app containers’ database link to the production database and direct some amount of traffic to the environment.
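Because the app containers only know the database by its link alias `oracle`, switching an environment to another database is, in principle, a matter of recreating the app container with a different link target (legacy links are fixed when a container is created). A minimal sketch, where `prod_oracle` is a hypothetical container standing in for the production database and `DOCKER=echo` gives a dry run:

```bash
DOCKER=${DOCKER:-docker}  # set DOCKER=echo for a dry run

# Start an app container linked to the given database container. The alias
# stays "oracle", so the app's own configuration does not change.
# Usage: run_app <name> <db-container> <jms-container> <image>
run_app() {
    name=$1 db=$2 jms=$3 image=$4
    $DOCKER run -t -d \
        --name "$name" \
        --link "$db:oracle" \
        --link "$jms:activemq" \
        "$image"
}
```

The same call with `oracle_$NAME_POSTFIX` instead of `prod_oracle` gives the ordinary test setup, so the only difference between the two is the link target.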

By monitoring our environments, we could make sure that an environment with a high error rate is automatically stopped and no longer receives traffic. A successful new environment (zero errors) would be given more and more traffic until the old versions are out of service and the next new environment is starting up. Realistically, we would have a couple of different versions up and running as our stories are completed, automatically built by CI and pushed to production. As we are committed to increasing our team velocity and decreasing cycle time, more and more stories will be completed per unit of time. That implies an increasing number of production deploys and probably more concurrently running environments.
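The ramp-up rule could start out as simple as the following sketch. The step size, the percentages and the rule that any error drains an environment are assumptions for illustration; a real controller would also rebalance the shares so they add up to 100 across all running environments.

```bash
# Hypothetical ramp-up policy for one environment. Given its current share
# of traffic (percent), its error count since the last check and an
# optional step (default 10), print its new share:
#   - any error drains the environment to 0, and a drained one stays at 0;
#   - an error-free environment grows by the step, capped at 100.
# New environments therefore start at a small non-zero share.
next_share() {
    current=$1 errors=$2 step=${3:-10}
    if [ "$errors" -gt 0 ] || [ "$current" -eq 0 ]; then
        echo 0
    elif [ $((current + step)) -ge 100 ]; then
        echo 100
    else
        echo $((current + step))
    fi
}
```

For example, an error-free environment at 10% moves to 20%, while one reporting errors at 50% drops to 0% and stays there until it is stopped.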

I have seen some variants of this architecture in presentations given by Twitter and Netflix. Netflix announced Asgard as Open Source back in 2012.

This is our plan for solving the problem of a static test environment infrastructure. It would be interesting to hear about your experiences. How do you handle the complexity of having n test environments?